The ultimate guide to Java Security Vulnerabilities (CVE)

I’ve spent a large part of the past two years investigating, verifying, and patching vulnerabilities and updating dependencies in large JVM-based enterprise codebases. It was not fun. My goal here is to create a comprehensive resource on the topic so that anyone facing similar challenges can draw on it and save some time and effort.

The basics

Every software product consists of code, and code can have vulnerabilities. Because no one writes code from scratch these days, we all rely on (mostly) open-source libraries, so their vulnerabilities become ours. When a new vulnerability is discovered and verified, it’s added to the CVE list run by MITRE and/or NIST’s National Vulnerability Database (NVD) and assigned an ID like CVE-2022-42004.
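These IDs end up in scan reports, tickets, and suppression lists, often with inconsistent casing (this article itself uses cve-2021-27568 further down). A minimal Java sketch that normalizes them, assuming only the standard syntax: "CVE-", a four-digit year, "-", and a sequence number of at least four digits. The class name CveId is hypothetical.

```java
import java.util.Locale;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// A CVE ID is "CVE-", a four-digit year, "-", and a sequence number of at least four digits.
final class CveId {
    private static final Pattern FORMAT = Pattern.compile("CVE-(\\d{4})-(\\d{4,})");

    final int year;
    final String sequence; // kept as a string so leading zeros survive ("CVE-2014-0160")

    private CveId(int year, String sequence) {
        this.year = year;
        this.sequence = sequence;
    }

    static CveId parse(String id) {
        // Scan reports and tickets are not consistent about case ("cve-2021-27568")
        Matcher m = FORMAT.matcher(id.trim().toUpperCase(Locale.ROOT));
        if (!m.matches()) {
            throw new IllegalArgumentException("Not a CVE ID: " + id);
        }
        return new CveId(Integer.parseInt(m.group(1)), m.group(2));
    }

    @Override
    public String toString() {
        return "CVE-" + year + "-" + sequence;
    }

    public static void main(String[] args) {
        System.out.println(parse("cve-2021-27568"));      // CVE-2021-27568
        System.out.println(parse("CVE-2022-42004").year); // 2022
    }
}
```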

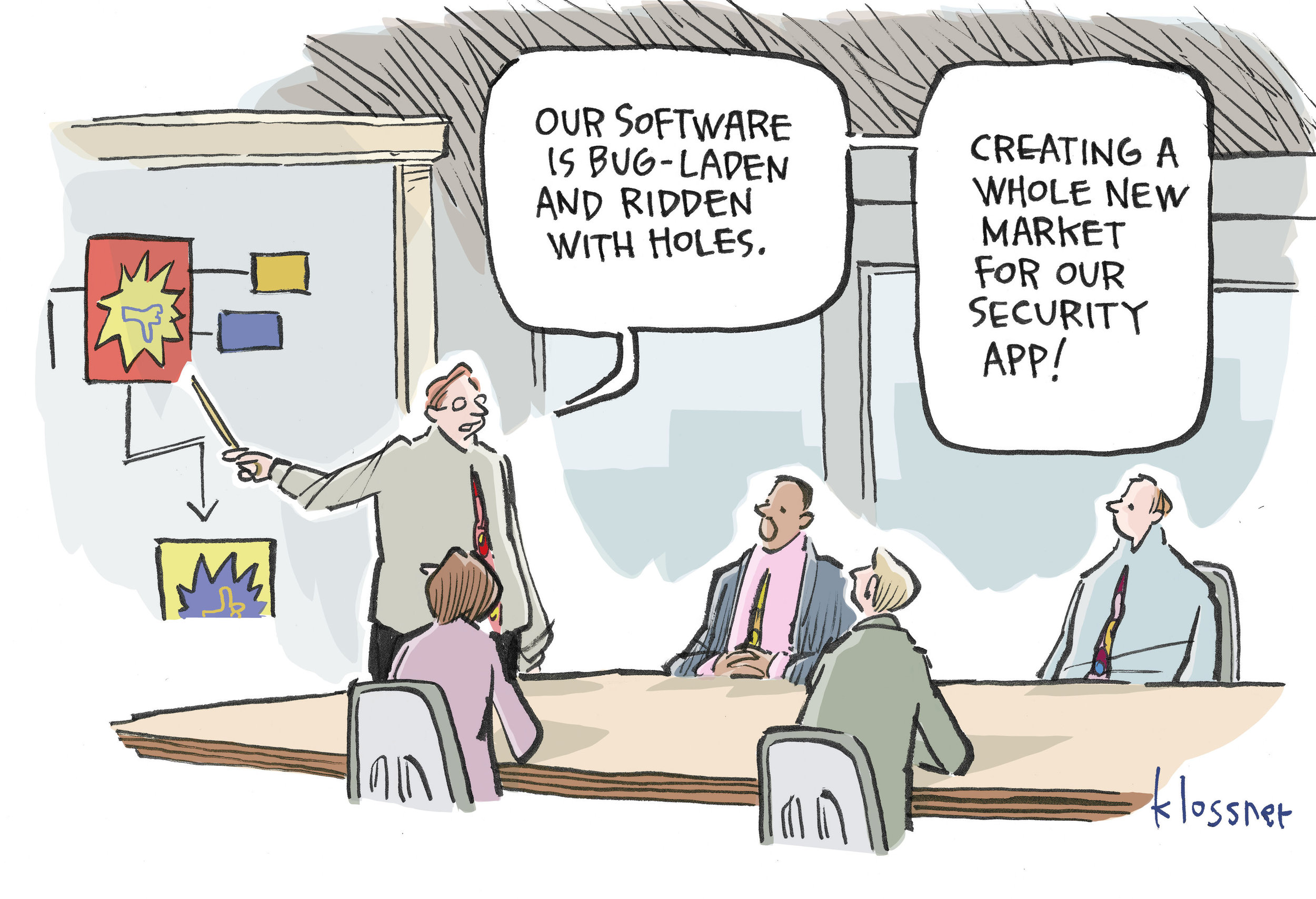

Back in the older days, this wasn’t such a big deal: we didn’t have as much software running in the cloud, and a lot of software was hidden inside company networks, so the attack surface was fairly limited. Nowadays, especially since Log4Shell (CVE-2021-44228), which affected half the internet, every other company is worried that its software might be exposed. This is especially true for large organizations subject to government oversight or strict regulation (not to mention GDPR and similar laws).

Even if you’re not working for a large company with a massive legacy codebase, that doesn’t mean you’re off the hook: chances are, your company’s clients are big corporations. These tend to prefer on-premise solutions for various reasons, and they scan every software package they install in their systems for CVEs. The sales department complaining that they can’t land new customers because our codebase has too many CVEs is a real thing.

TL;DR: we all have to worry about this sooner or later.

So with that said, let’s say that you’re an engineer and a manager comes your way shouting “We gotta get our CVEs under control!”. That brings us to Part 1, the scan tooling.

Part 1: The CVE scan tooling

We have to start by establishing which CVEs we have in our codebase. This sounds simple but is not that straightforward. I’ve tried plenty of tools; here’s a brief breakdown:

Veracode

Absolute garbage. Avoid at all costs. I might be unfair in my judgment, since all I know about it is that it scans everything but the kitchen sink, takes almost 24 hours to complete on our systems, and generates a PDF report of 100+ pages with little to no value. I still don’t know what exact value it’s supposed to bring, but from an engineer’s perspective, it’s far from a useful tool in this context. Judging by their website, it should be on par with other tools, but to the extent that I’ve interacted with it: no thanks.

Snyk, Apiiro

Both of these tools are decent with tons of options, provide useful graphs, work fairly quickly, and are generally nice to use. There’s just one problem - they don’t work correctly with Maven dependencies. This turned out to be an absolute pain in the butt for our team. Having dashboards, fancy graphs and charts is nice, but having to deal with false positives is extremely tedious. I’ll elaborate more on this once we get to the details of addressing CVEs.

I recommend using them for analyzing non-Java dependencies (npm, system-level, etc.), but for Java, it’s a no-go.

Trivy

It’s the holy grail of security scanning, at least for Java. It’s primarily a CLI tool for scanning Docker images, but it can also scan filesystem contents. In the following parts, I’ll explain how to use it effectively.

I did notice that it does not detect CVEs in bundled JS (once you build your frontend project and bundle it into a Docker image), so if you want to cover that as well, Snyk/Apiiro might be a better tool.

OWASP Dependency-Check

I haven’t used it myself, but people have pointed out to me that it definitely belongs on this list.

Part 2: The workflow

I’ve called this article a guide to “Java Security Vulnerabilities”, but almost all of the content here applies to every JVM language, be it Java, Scala, Kotlin, Groovy, etc. The whole JVM world runs on Maven repositories: even if you’re using Gradle, sbt, or another build tool, you’re still pulling from Maven repositories. This guide focuses on Gradle, but equivalent tooling exists in almost every build tool.

Let’s assume that our scan results show one or more occurrences of CVE-2021-27568 that need to be addressed. Usually, the CVE description states explicitly which libraries and versions are affected. In this case, the CVE says:

netplex json-smart-v1 through 2015-10-23 and json-smart-v2 through 2.4 are affected versions.

First, let’s check whether a patched version even exists by searching mvnrepository or Maven Central.

Thankfully, there is: it looks like we need 2.4.4 or newer. Great. Now let’s see why json-smart is on our classpath in the first place:

./gradlew dependencies > deps

This generates a full list of our project’s dependencies. There are equivalent commands for Maven or sbt (for example, mvn dependency:tree in Maven). If we have an explicit dependency in one of our build.gradle files, the fix is pretty straightforward, but that’s usually not the case. Once we open that deps file, we see a tree-like structure:

compileClasspath - Compile classpath for source set 'main'.
+--- org.scala-lang:scala-library:2.11.12
+--- org.slf4j:slf4j-api:1.7.32
+--- project :shared:logging
| +--- org.apache.logging.log4j:log4j-slf4j-impl:2.17.2
| | +--- org.slf4j:slf4j-api:1.7.25 -> 1.7.32
| | \--- org.apache.logging.log4j:log4j-api:2.17.2
| +--- org.apache.logging.log4j:log4j-core:2.17.2
| | \--- org.apache.logging.log4j:log4j-api:2.17.2
| +--- org.apache.httpcomponents:httpclient:4.5.2 -> 4.5.9
| | +--- org.apache.httpcomponents:httpcore:4.4.11
| | +--- commons-logging:commons-logging:1.2
| | \--- commons-codec:commons-codec:1.11
| \--- javax.servlet:javax.servlet-api:3.1.0
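Reading such a tree by eye gets old quickly in a large project. A minimal Java sketch that pulls the coordinates out of each line, assuming Gradle’s usual +--- and \--- markers. DependencyLine is a hypothetical helper, and lines without an explicit requested version are simply skipped.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// One "group:artifact:version" entry from `./gradlew dependencies` output.
record DependencyLine(String group, String artifact, String requested, String resolved) {

    // Matches "+--- g:a:requested" or "\--- g:a:requested", optionally followed by " -> resolved".
    // Markers such as " (*)" after the version are ignored.
    private static final Pattern ENTRY =
            Pattern.compile("[+\\\\]--- ([^:\\s]+):([^:\\s]+):(\\S+)(?: -> (\\S+))?");

    static Optional<DependencyLine> parse(String line) {
        Matcher m = ENTRY.matcher(line);
        if (!m.find()) {
            return Optional.empty(); // headers, "project :shared:logging" lines, blank lines
        }
        String requested = m.group(3);
        String resolved = m.group(4) != null ? m.group(4) : requested;
        return Optional.of(new DependencyLine(m.group(1), m.group(2), requested, resolved));
    }

    // True when the report shows an arrow: the requested version (or range) was replaced.
    boolean evicted() {
        return !requested.equals(resolved);
    }

    public static void main(String[] args) throws IOException {
        // Print every replaced entry from the report produced by `./gradlew dependencies > deps`
        for (String line : Files.readAllLines(Path.of("deps"))) {
            parse(line).filter(DependencyLine::evicted).ifPresent(System.out::println);
        }
    }
}
```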

What we have here is a list of all transitive dependencies of a given project. What this chunk tells us is as follows:

- There’s a compile dependency on a module called logging, which has a direct dependency on org.apache.logging.log4j:log4j-slf4j-impl:2.17.2, which in turn depends on org.slf4j:slf4j-api:1.7.25. All of these jars will be downloaded and included in our production bundle.

The arrow in org.slf4j:slf4j-api:1.7.25 -> 1.7.32 tells us that although log4j-slf4j-impl depends on 1.7.25, version 1.7.32 is also on our classpath (our project declares it directly), so 1.7.25 is evicted. An evicted dependency has been pushed out by a newer version, and the newer version is used instead. I won’t go into too much detail here, because Maven dependency management is a fairly broad topic; you can learn more here.
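Which version wins depends on the build tool. Gradle’s default is to pick the highest requested version, while Maven’s default is “nearest wins”: the declaration closest to the root of the tree is used (the first one on a tie), even if it’s older. A simplified Java sketch contrasting the two. ConflictResolution and its depth-based model are illustrative assumptions, not either tool’s actual implementation, and the comparison only handles purely numeric versions.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

// Two conflict-resolution strategies for one module requested at several places in the graph.
final class ConflictResolution {

    // A request for some version of the module, found `depth` levels below the project.
    record Request(String version, int depth) {}

    // Numeric, segment-by-segment comparison; missing segments count as 0 ("2.4" == "2.4.0").
    static int compareVersions(String a, String b) {
        int[] x = Arrays.stream(a.split("\\.")).mapToInt(Integer::parseInt).toArray();
        int[] y = Arrays.stream(b.split("\\.")).mapToInt(Integer::parseInt).toArray();
        for (int i = 0; i < Math.max(x.length, y.length); i++) {
            int c = Integer.compare(i < x.length ? x[i] : 0, i < y.length ? y[i] : 0);
            if (c != 0) return c;
        }
        return 0;
    }

    // Gradle-style: the highest requested version wins, wherever it appears in the tree.
    static String highestWins(List<Request> requests) {
        return requests.stream().map(Request::version)
                .max(ConflictResolution::compareVersions).orElseThrow();
    }

    // Maven-style: the shallowest declaration wins; on a tie, the first one listed.
    static String nearestWins(List<Request> requests) {
        return requests.stream().min(Comparator.comparingInt(Request::depth))
                .orElseThrow().version();
    }

    public static void main(String[] args) {
        // Hypothetical graph: json-smart 2.3 requested two levels down, 2.4.8 three levels down
        List<Request> requests = List.of(new Request("2.3", 2), new Request("2.4.8", 3));
        System.out.println(highestWins(requests)); // 2.4.8
        System.out.println(nearestWins(requests)); // 2.3
    }
}
```

This is also why adding an explicit dependency works as a fix in both tools: in Gradle the newer version wins the comparison, and in Maven a direct dependency is always the nearest one.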

Let’s look at a chunk of a real dependency report and try to get rid of that json-smart problem:

| | | | +--- project :shared:custom-msal-lib
| | | | | \--- com.microsoft.azure:msal4j:1.10.1
| | | | | +--- com.nimbusds:oauth2-oidc-sdk:9.7
| | | | | | +--- com.github.stephenc.jcip:jcip-annotations:1.0-1
| | | | | | +--- com.nimbusds:content-type:2.1
| | | | | | +--- net.minidev:json-smart:[1.3.3,2.4.7] -> 2.3

Looks like our Gradle submodule custom-msal-lib depends (through msal4j) on com.nimbusds:oauth2-oidc-sdk:9.7, which pulls in json-smart:2.3. The bracketed notation [1.3.3,2.4.7] is a version range: the library’s authors have taken the effort to declare that any json-smart version from 1.3.3 to 2.4.7 (inclusive) is compatible. This is rarely the case. The -> 2.3 tells us that we’re currently using 2.3.
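Gradle and Maven both use this interval notation: square brackets are inclusive bounds, parentheses exclusive, and an empty bound is unbounded. A simplified Java membership check, assuming plain numeric versions. VersionRange is a hypothetical helper; it ignores Maven’s qualifier rules, unions such as [1.0,2.0),[3.0,), and exact pins such as [1.0].

```java
import java.util.Arrays;

// A single Maven-style version interval such as "[1.3.3,2.4.7]".
final class VersionRange {
    private final String lower;
    private final boolean lowerInclusive;
    private final String upper;
    private final boolean upperInclusive;

    private VersionRange(String lower, boolean lowerInclusive, String upper, boolean upperInclusive) {
        this.lower = lower;
        this.lowerInclusive = lowerInclusive;
        this.upper = upper;
        this.upperInclusive = upperInclusive;
    }

    static VersionRange parse(String spec) {
        String s = spec.trim();
        if (s.length() < 3 || "[(".indexOf(s.charAt(0)) < 0 || "])".indexOf(s.charAt(s.length() - 1)) < 0) {
            throw new IllegalArgumentException("Not a version range: " + spec);
        }
        String[] bounds = s.substring(1, s.length() - 1).split(",", -1);
        if (bounds.length != 2) {
            throw new IllegalArgumentException("Expected exactly two bounds: " + spec);
        }
        return new VersionRange(bounds[0].trim(), s.charAt(0) == '[',
                bounds[1].trim(), s.charAt(s.length() - 1) == ']');
    }

    boolean contains(String version) {
        if (!lower.isEmpty()) {
            int c = compare(version, lower);
            if (c < 0 || (c == 0 && !lowerInclusive)) return false;
        }
        if (!upper.isEmpty()) {
            int c = compare(version, upper);
            if (c > 0 || (c == 0 && !upperInclusive)) return false;
        }
        return true;
    }

    // Numeric, segment-by-segment comparison; missing segments count as 0.
    private static int compare(String a, String b) {
        int[] x = Arrays.stream(a.split("\\.")).mapToInt(Integer::parseInt).toArray();
        int[] y = Arrays.stream(b.split("\\.")).mapToInt(Integer::parseInt).toArray();
        for (int i = 0; i < Math.max(x.length, y.length); i++) {
            int c = Integer.compare(i < x.length ? x[i] : 0, i < y.length ? y[i] : 0);
            if (c != 0) return c;
        }
        return 0;
    }
}
```

With the range from the report, VersionRange.parse("[1.3.3,2.4.7]").contains("2.3") is true and contains("2.4.8") is false, so the 2.4.8 we end up pinning sits outside what the library declares.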

Here’s what the build.gradle of custom-msal-lib looks like:

dependencies {
    compile "com.microsoft.azure:msal4j:1.10.1"
}

Super straightforward. Now we’ve got two options here:

- If a newer version of msal4j exists that doesn’t have that json-smart dependency (or at least depends on a version that is no longer affected), we can just upgrade the library itself.

- If there isn’t a newer version out there, we can add an explicit dependency just for json-smart.

Let’s go with the second route:

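dependencies {
    // `compile` was removed in Gradle 7; use `implementation` there
    compile "com.microsoft.azure:msal4j:1.10.1"
    // Explicit version: conflict resolution picks it over the vulnerable transitive 2.3
    compile "net.minidev:json-smart:2.4.8"
}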

Once we re-run the dependency report:

| | | | +--- project :shared:custom-msal-lib
| | | | | +--- com.microsoft.azure:msal4j:1.10.1
| | | | | | +--- com.nimbusds:oauth2-oidc-sdk:9.7
| | | | | | | +--- com.github.stephenc.jcip:jcip-annotations:1.0-1
| | | | | | | +--- com.nimbusds:content-type:2.1
| | | | | | | +--- net.minidev:json-smart:[1.3.3,2.4.7] -> 2.4.8

Perfect! We’re all done here. Rinse and repeat until you’ve replaced every vulnerable occurrence in the dependency tree.

You might have noticed that we’ve upgraded to a version newer than the upper bound of the range the library declares. That’s usually fine: 90% of the time we don’t have that information at all, so we’re taking the same risk anyway. If we patch too far and cause incompatibilities, we need to start rolling back to find a version that still works but also addresses our CVE.

Part 3: The problems

Snyk and Apiiro

As shown in the json-smart example, we’ve gotten rid of the vulnerable dependency, and we can verify that by running a dependency report from our build tool. Trivy will also correctly identify that we’re no longer including a compromised jar. Unfortunately, that’s not the case for Snyk and Apiiro (and probably a bunch of other tools).

Snyk and Apiiro are static scanners, which means that they just parse the declarations in build.gradle, not the actual resolved dependency tree. In simple terms, they’re too dumb to build the dependency tree and look at what’s actually being bundled into our app. All they do is look at the top-level dependency, cross-check it with the CVE databases, and flag it if that version is vulnerable.

So if your codebase contains a lot of CVE fixes that come from evicting versions, you will get a ton of false positives from these tools. Trivy does not have that problem, as it looks at the jars that are physically present in an archive, not at what’s declared in our build tools.
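To make the difference concrete: jars built with Maven usually record their own coordinates in META-INF/maven/&lt;groupId&gt;/&lt;artifactId&gt;/pom.properties, so reading the archive tells you what actually shipped regardless of what build.gradle declares. Jars built by other tools may not carry that file, which is one reason real scanners use additional signals. A minimal Java sketch of the principle only; it is not how Trivy is implemented, and JarInventory is a hypothetical name.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.Enumeration;
import java.util.List;
import java.util.Properties;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;
import java.util.stream.Stream;

// Lists the Maven coordinates recorded inside a jar (fat jars may record several).
final class JarInventory {

    static List<String> coordinates(Path jarPath) throws IOException {
        List<String> found = new ArrayList<>();
        try (JarFile jar = new JarFile(jarPath.toFile())) {
            Enumeration<JarEntry> entries = jar.entries();
            while (entries.hasMoreElements()) {
                JarEntry entry = entries.nextElement();
                String name = entry.getName();
                if (name.startsWith("META-INF/maven/") && name.endsWith("/pom.properties")) {
                    Properties props = new Properties();
                    try (InputStream in = jar.getInputStream(entry)) {
                        props.load(in);
                    }
                    found.add(props.getProperty("groupId") + ":"
                            + props.getProperty("artifactId") + ":" + props.getProperty("version"));
                }
            }
        }
        return found;
    }

    public static void main(String[] args) throws IOException {
        // args[0]: a directory of build output, e.g. an unpacked image layer or a lib/ folder
        List<Path> jars;
        try (Stream<Path> paths = Files.walk(Path.of(args[0]))) {
            jars = paths.filter(p -> p.toString().endsWith(".jar")).toList();
        }
        for (Path jar : jars) {
            System.out.println(jar + " -> " + coordinates(jar));
        }
    }
}
```

Because it looks at files on disk, this kind of check also catches jars copied into the bundle by custom build logic that never shows up in a dependency report.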

Modules

In small codebases, we usually don’t have a ton of modules. Unfortunately, sometimes some people like to module the living shit out of their projects, which results in a hellish dependency graph. As you can probably guess, that has been the case for me lately. It gets worse when you introduce cycles and other messy relations between modules. Fortunately, there is a simple strategy to simplify the situation.

You need to identify the module(s) responsible for assembling the final archive during your build process and run the scanning there. You don’t need to analyze each module; just focus on the ones that are relevant in the final step of the build process. In my case:

./gradlew :project:assemble:dependencies > deps

This will show you the tree of what’s being bundled in the final archive.

…Unless you have some more absolutely-necessary-custom-logic in your build codebase, like manually copying jars into the final bundle from yet another location. That of course will not be picked up during the build tool scanning. Thankfully, Trivy will still notice it.

Part 4: The really big problems

Classpath issues

Your codebase might contain stuff like spawning subprocesses and passing in the classpath dynamically for those processes. As an example:

Your app A runs another app B with a classpath that’s defined in a different module inside A’s project structure.

This is quite a… peculiar design choice, but that’s a topic for another time. Any kind of dynamic classpath manipulation is very dangerous when upgrading dependencies, because you might not find out about broken dependencies until runtime hits you with a ClassNotFoundException. It can get even worse, as libraries might be incompatible with one another, leading to a fatal situation:

- Library X only works with library Y below version 2.3.4

- Library Z only works with library Y from version 2.3.4 onwards

If for some reason you’re unable to bump X or Z (e.g. because the cost of upgrading would be too high), you’re screwed. I nearly ran into this with org.apache.hadoop:hadoop-azure:

- 3.2.3 breaks a large part of the codebase and requires a massive rewrite

- 3.2.1 is still vulnerable

- luckily, 3.2.2 was good enough on both counts, but that’s a very thin margin
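Both situations boil down to intersecting constraints: a candidate version must be free of the CVE and compatible with everything that depends on it. A small Java sketch of that search. The predicates stand in for whatever evidence you actually have (advisories, integration test runs), treating every hadoop-azure release up to 3.2.1 as vulnerable is an assumption for illustration, and UpgradeWindow is a hypothetical name.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Optional;
import java.util.function.Predicate;

// Finds the newest candidate version that is not vulnerable and still works with the codebase.
final class UpgradeWindow {

    // Numeric, segment-by-segment comparison; missing segments count as 0.
    static int compare(String a, String b) {
        int[] x = Arrays.stream(a.split("\\.")).mapToInt(Integer::parseInt).toArray();
        int[] y = Arrays.stream(b.split("\\.")).mapToInt(Integer::parseInt).toArray();
        for (int i = 0; i < Math.max(x.length, y.length); i++) {
            int c = Integer.compare(i < x.length ? x[i] : 0, i < y.length ? y[i] : 0);
            if (c != 0) return c;
        }
        return 0;
    }

    static Optional<String> newestAcceptable(List<String> candidates,
                                             Predicate<String> vulnerable,
                                             Predicate<String> compatible) {
        return candidates.stream()
                .filter(vulnerable.negate())
                .filter(compatible)
                .max(UpgradeWindow::compare);
    }

    public static void main(String[] args) {
        // hadoop-azure as described above: 3.2.1 (and older) vulnerable, 3.2.3 breaks the codebase
        Optional<String> pick = newestAcceptable(List.of("3.2.1", "3.2.2", "3.2.3"),
                v -> compare(v, "3.2.1") <= 0,
                v -> compare(v, "3.2.3") < 0);
        System.out.println(pick); // Optional[3.2.2]
    }
}
```

For the X/Y/Z case, the combined compatibility predicate v -> compare(v, "2.3.4") < 0 && compare(v, "2.3.4") >= 0 can never be true, so the result is Optional.empty() no matter which candidates you try.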

Jackson and the DDoS of hell

Jackson is the serialization library that almost all Java software depends on. When we upgraded it from 2.11.4 to 2.13.3, all hell broke loose. It was probably the funniest and most annoying problem I’ve seen to date: after the upgrade, only in some production environments, the system started DDoSing itself as soon as certain accounts logged in, until it died. This one deserves an entire blog post of its own. After far too long, we traced the issue to Jackson serialization. It turned out that with the upgrade, the serialization of boolean properties had changed.

A DTO consumed by the frontend had a boolean property that the frontend referenced as isAdd. That reference now returned null; add had to be used instead. In a codebase written largely before TypeScript existed, this is one hell of a problem to have.
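Some background on the naming: a Kotlin val isAdd: Boolean compiles to a JVM getter called isAdd(), and by the JavaBeans naming rules (which Jackson also applies to plain Java getters by default) the property behind isAdd() is called add, not isAdd. A small stdlib-only Java check of that rule; BeanNames and PermissionDto are hypothetical stand-ins for the real DTO.

```java
import java.beans.IntrospectionException;
import java.beans.Introspector;
import java.beans.PropertyDescriptor;
import java.util.Arrays;
import java.util.List;

final class BeanNames {

    // Shaped like the JVM view of a Kotlin `val isAdd: Boolean`: a boolean getter named isAdd().
    public static final class PermissionDto {
        private final boolean add;

        public PermissionDto(boolean add) {
            this.add = add;
        }

        public boolean isAdd() {
            return add;
        }
    }

    // Property names as derived by the JavaBeans rules: getFoo()/isFoo() -> "foo".
    static List<String> propertyNames(Class<?> type) throws IntrospectionException {
        return Arrays.stream(Introspector.getBeanInfo(type, Object.class).getPropertyDescriptors())
                .map(PropertyDescriptor::getName)
                .toList();
    }

    public static void main(String[] args) throws IntrospectionException {
        System.out.println(propertyNames(PermissionDto.class)); // [add]
    }
}
```

Whether the JSON key ends up as add or isAdd therefore depends on which naming logic the serializer applies, which is exactly what shifted under us.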

I cursed the Jackson team a lot for introducing such a fundamental change to a library this popular, so much so that I couldn’t believe they’d made that decision consciously. And that’s most likely true: this change is nowhere to be found in their release notes. Since we discovered it, the issue has been moved from jackson-databind to jackson-module-kotlin. So it wasn’t caused by jackson-databind itself, but by the fact that jackson-module-kotlin is on the classpath. We didn’t touch that library in any of our upgrades, yet it resulted in weeks of tedious whack-a-mole.

Shaded dependencies

While we’re on the subject of Jackson, there’s one more niche problem I need to mention. Some libraries include shaded dependencies in their bundles. A very good explanation can be found here. What this means is that a library A can bundle an entire library B as part of its own codebase (often relocated to a different package), instead of declaring it as a dependency in its pom.xml (as is usually the case). This is bad because we can’t upgrade that shaded dependency independently. On the one hand, this will most likely not be detected by any of the CVE scanners (including Trivy). On the other, you’re still affected by whatever CVEs that library has. If the parent library does not have a patched version compatible with your codebase, you’re out of luck. The best you can do is fork the library and try upgrading it on your own.
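Since scanners may not see shaded code, it can be worth checking suspicious jars yourself. A heuristic Java sketch, assuming the shading tool kept the original package path under a new prefix (a common relocation style, not a universal one; relocations that rename the whole path are missed). ShadedScan and the example class paths are hypothetical.

```java
import java.io.IOException;
import java.util.Set;
import java.util.TreeSet;
import java.util.jar.JarEntry;
import java.util.jar.JarFile;

// Heuristic search for relocated (shaded) copies of a package inside a jar, e.g.
// "org/example/shaded/com/fasterxml/jackson/databind/ObjectMapper.class".
final class ShadedScan {

    static Set<String> relocationPrefixes(String jarPath, String packagePath) throws IOException {
        Set<String> prefixes = new TreeSet<>();
        try (JarFile jar = new JarFile(jarPath)) {
            jar.stream()
               .map(JarEntry::getName)
               .filter(name -> name.endsWith(".class"))
               .forEach(name -> {
                   int i = name.indexOf(packagePath);
                   // i == 0 is the library itself; a match right after a '/' is a relocated copy
                   if (i > 0 && name.charAt(i - 1) == '/') {
                       prefixes.add(name.substring(0, i));
                   }
               });
        }
        return prefixes;
    }

    public static void main(String[] args) throws IOException {
        // args[0]: path to a jar; looks for relocated Jackson classes
        System.out.println(relocationPrefixes(args[0], "com/fasterxml/jackson/"));
    }
}
```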

Estimating CVE work

I hope that by this point you can see all the intricacies and nuances of working with CVEs. Estimating any “normal” software development work is hard to begin with. Estimating CVE patching is next to impossible. That’s an answer no manager is going to accept, so here are some hints on how to approach the estimation:

- This line of work is very heavily impacted by the know-how of your team. How well do you know the codebase? How many libraries are used in total? How complex is it?

- Integration and e2e test coverage is key to detecting issues early. Unit tests are far less useful here, so the more integration/e2e tests you have, the better.

- Compile a list of CVEs to address and rank them by potential impact and difficulty. A Protobuf/Jackson/Spark upgrade will most likely be far more involved than bumping an email client.

- Propose a percentage reduction of CVEs in batches rather than committing to specific CVEs. If you hit a roadblock, move on to the next one and come back later. As I’ve mentioned, you might encounter some CVEs that are next to impossible to patch, and the higher-ups need to be aware of that. There will probably be exceptions (no one will let Log4Shell pass), but you need to make sure that the difficulty of the task is well understood across the company. Agree to reduce the CVE count by X% in the next release, Y% in the one after, etc.

- Last but not least, automate.
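The ranking and the batch targets fit in a spreadsheet, but a short sketch makes the arithmetic explicit. The severity scale, the 1 to 5 effort scores, the IDs A/B/C, and the example numbers are all hypothetical.

```java
import java.util.Arrays;
import java.util.Comparator;
import java.util.List;

final class CvePlan {

    enum Severity { LOW, MEDIUM, HIGH, CRITICAL }

    // effort: the team's rough estimate, e.g. 1 = simple version bump, 5 = Jackson/Spark-sized upgrade
    record Finding(String id, Severity severity, int effort) {}

    // Most severe first; within the same severity, cheapest first.
    static List<Finding> rank(List<Finding> findings) {
        return findings.stream()
                .sorted(Comparator.comparing(Finding::severity).reversed()
                        .thenComparingInt(Finding::effort))
                .toList();
    }

    // CVE count remaining after each release, given the agreed percentage reduction per release.
    static int[] targets(int start, int... reductionPercent) {
        int[] remaining = new int[reductionPercent.length];
        int current = start;
        for (int i = 0; i < reductionPercent.length; i++) {
            current -= current * reductionPercent[i] / 100; // integer division rounds the reduction down
            remaining[i] = current;
        }
        return remaining;
    }

    public static void main(String[] args) {
        List<Finding> ranked = rank(List.of(
                new Finding("A", Severity.HIGH, 5),
                new Finding("B", Severity.CRITICAL, 3),
                new Finding("C", Severity.HIGH, 1)));
        ranked.forEach(f -> System.out.println(f.id()));        // B, C, A
        System.out.println(Arrays.toString(targets(120, 25, 20))); // [90, 72]
    }
}
```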

Part 5: Automation to the rescue

As any good doctor will tell you, it’s far better to prevent issues than to cure them. CVE scanning should be part of your CI pipeline from day 1. It’s trivial to set up nowadays with GitHub Actions and similar CI tools. For new projects, I recommend failing your CI scan job if any high/critical CVE is detected (super easy to do with Trivy). We could fail the entire pipeline instead, but new CVEs come in every day, and a patched stable version may not be available for a while.
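The “super easy” part comes from two Trivy flags: --severity HIGH,CRITICAL limits the findings, and --exit-code 1 makes Trivy return 1 when any remain. Combined with allow_failure: true (as in the GitLab job in this section), the job is flagged without blocking the rest of the pipeline. A Java sketch of such a gate that shells out to Trivy; it assumes a trivy binary on the PATH and the image name as the first argument, and TrivyGate is hypothetical. In a CI YAML file you would normally pass the same flags to Trivy directly.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;

// Builds a Trivy image scan that exits with code 1 if any HIGH or CRITICAL vulnerability is found.
final class TrivyGate {

    static List<String> command(String image, boolean failOnHighOrCritical) {
        List<String> cmd = new ArrayList<>(List.of("trivy", "image", "--no-progress"));
        if (failOnHighOrCritical) {
            // Only HIGH/CRITICAL findings are reported, and any of them makes Trivy exit with 1
            cmd.addAll(List.of("--exit-code", "1", "--severity", "HIGH,CRITICAL"));
        } else {
            cmd.addAll(List.of("--exit-code", "0")); // report everything, never fail
        }
        cmd.add(image);
        return cmd;
    }

    public static void main(String[] args) throws IOException, InterruptedException {
        // args[0]: the image to scan
        Process trivy = new ProcessBuilder(command(args[0], true)).inheritIO().start();
        System.exit(trivy.waitFor()); // propagate Trivy's exit code so the CI job fails
    }
}
```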

Do your best to address all high/critical ones as you go along. Use only stable versions. Produce a report with each build. Here’s a sample CI scan job I wrote for GitLab CI a while back:

test:cve-scanning:
  stage: test
  image: docker:18-git
  services:
    - docker:18-dind
  variables:
    DOCKER_IMAGE: $DOCKER_IMAGE_REPOSITORY:$CI_COMMIT_SHA
    FILENAME: cve-report-xxx-$CI_COMMIT_TIMESTAMP
  before_script:
    - export TRIVY_VERSION=$(wget -qO - "https://api.github.com/repos/aquasecurity/trivy/releases/latest" | grep '"tag_name":' | sed -E 's/.*"v([^"]+)".*/\1/')
    - echo $TRIVY_VERSION
    - wget --no-verbose https://github.com/aquasecurity/trivy/releases/download/v${TRIVY_VERSION}/trivy_${TRIVY_VERSION}_Linux-64bit.tar.gz -O - | tar -zxvf -
    - mkdir ~/.docker && echo "$DOCKER_AUTH_CONFIG" > ~/.docker/config.json
  allow_failure: true
  script:
    # Build image
    - docker build --pull --no-cache -f docker/xxx/yyy/Dockerfile -t $DOCKER_IMAGE .
    # Build report (--cache-dir points Trivy at the directory cached below)
    - ./trivy image --cache-dir .trivycache/ --exit-code 0 --no-progress -o $FILENAME $DOCKER_IMAGE
    # Print report
    - ./trivy image --cache-dir .trivycache/ --exit-code 0 --no-progress $DOCKER_IMAGE
  cache:
    paths:
      - .trivycache/
  artifacts:
    paths:
      - $FILENAME
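The first before_script line resolves the latest release tag with grep and sed. The same extraction in Java, to show what the regex does: it takes the value of "tag_name", strips the leading v, and builds the tarball URL used in the next line. TrivyRelease is hypothetical, and the JSON is assumed to contain a v-prefixed tag_name, exactly as the script itself assumes.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Equivalent of the grep/sed pipeline: take "tag_name": "vX.Y.Z" from the releases/latest
// JSON and build the Linux 64-bit tarball URL from it.
final class TrivyRelease {
    private static final Pattern TAG = Pattern.compile("\"tag_name\"\\s*:\\s*\"v([^\"]+)\"");

    static String version(String latestReleaseJson) {
        Matcher m = TAG.matcher(latestReleaseJson);
        if (!m.find()) {
            throw new IllegalArgumentException("No v-prefixed tag_name in the response");
        }
        return m.group(1); // "v0.35.0" -> "0.35.0"
    }

    static String downloadUrl(String version) {
        return "https://github.com/aquasecurity/trivy/releases/download/v" + version
                + "/trivy_" + version + "_Linux-64bit.tar.gz";
    }

    public static void main(String[] args) {
        String json = "{\"tag_name\": \"v0.35.0\", \"name\": \"v0.35.0\"}"; // trimmed, illustrative response
        System.out.println(downloadUrl(version(json)));
    }
}
```

Resolving “latest” on every run means the scanner version can change between builds, and unauthenticated GitHub API calls are rate-limited. Pinning TRIVY_VERSION under variables avoids both.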

The Docker build here duplicates a previous step, but just rebuilding the image is far easier than the alternatives. If you’re producing installable packages rather than Docker images, you can still use Trivy in filesystem mode together with an unpacking tool (e.g. rpm2cpio for RPM files).

Here’s another sample, taken from a TeamCity Kotlin DSL config (avoid TeamCity at all costs if possible):

steps {
    script {
        name = "Download Trivy"
        scriptContent = """
            wget --no-verbose https://github.com/aquasecurity/trivy/releases/download/v${trivyVersion}/trivy_${trivyVersion}_Linux-64bit.tar.gz -O - | tar -zxvf -
        """.trimIndent()
    }
    script {
        name = "Extract all RPMs"
        scriptContent = """
            cd $directory
            ls -al
            # Unpack each RPM separately: cpio stops reading at the first archive trailer
            find . -name "*.rpm" -exec sh -c 'rpm2cpio "$1" | cpio -idmv' _ {} \;
        """.trimIndent()
    }
    script {
        name = "Trivy scan"
        scriptContent = """
            ./trivy rootfs --severity HIGH,CRITICAL --security-checks vuln --format json $directory > $jobName.json
        """.trimIndent()
    }
}

Summary

I hope you find this helpful. Even though CVE patching isn’t what most programmers want to work on, it does have its benefits. Solving problems like the ones I’ve described above improves your overall knowledge and seniority far more than implementing yet another CRUD app.

So even if you find yourself banging your head against a wall at 2 am, there is a ton of value and, ultimately, satisfaction in solving such hard problems, even if they’re tedious. With that said, let’s hope we all get to experience challenges other than CVE patching. Best of luck!